- Installation verified on CentOS 7.9 (2022-12-09)
- Kubernetes (k8s) installation based on containerd
- Prerequisites: yum update, SSH key generation, Ansible installation
- install-kube-v2.yml (changes system settings and installs containerd, kubeadm, etc.)
- master-setup.yml (kubeadm init)
- worker-setup.yml (kubeadm join)

# yum upgrade on all nodes

yum -y upgrade

# ssh keygen in master

ssh-keygen -b 4096 -f ~/.ssh/mysshkey_rsa

# ssh key copy to worker1,2

ssh-copy-id -i ~/.ssh/mysshkey_rsa.pub root@worker1

ssh-copy-id -i ~/.ssh/mysshkey_rsa.pub root@worker2

# Set the hostname and edit the hosts file -> run on each node (one set-hostname command per node)

hostnamectl set-hostname master

hostnamectl set-hostname worker1

hostnamectl set-hostname worker2
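
The hosts-file edit mentioned in the comment above is not shown as a command. A minimal sketch of one way to generate the entries, assuming a hypothetical helper named `hosts_entries` and placeholder addresses (the 192.0.2.x values in the usage comment are documentation-only examples, not the addresses used in this setup):

```shell
# Hypothetical helper: print one /etc/hosts line per "name=ip" argument.
# The names and addresses passed in are placeholders, not values from this post.
hosts_entries() {
  local pair
  for pair in "$@"; do
    # "${pair#*=}" is the IP part, "${pair%%=*}" is the host name part
    printf '%s %s\n' "${pair#*=}" "${pair%%=*}"
  done
}

# Example (as root on each node, with your own addresses):
#   hosts_entries master=192.0.2.10 worker1=192.0.2.11 worker2=192.0.2.12 >> /etc/hosts
```

The function only prints to stdout, so the output can be reviewed before it is appended to `/etc/hosts`.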

# install ansible on the master node

yum -y install epel-release

yum -y install ansible

ansible --version

# Edit the Ansible inventory file /etc/ansible/hosts

[masters]

control-plane ansible_host={MASTER IP} ansible_user=root

[workers]

worker1 ansible_host={WORKER1 IP} ansible_user=root

worker2 ansible_host={WORKER2 IP} ansible_user=root

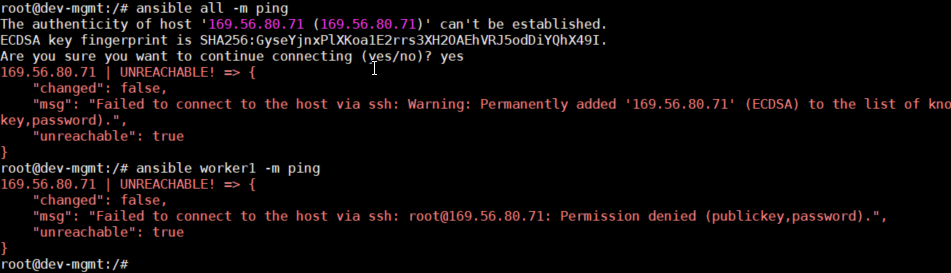

# Verify Ansible connectivity

ansible all -m ping

# install-kube

ansible-playbook install-kube-v2.yml

# master-setup

ansible-playbook master-setup.yml

# worker-setup -> first check that the /etc/kube_join_command file exists

ansible-playbook worker-setup.yml
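
Once all three playbooks have finished, it can help to confirm that every node reports `Ready`. A small sketch, assuming a hypothetical helper named `not_ready_nodes` that filters the output of `kubectl get nodes --no-headers` (nodes may stay `NotReady` for a short while until the Calico pods are up):

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example (on the master node):
#   kubectl get nodes --no-headers | not_ready_nodes
```

Empty output means all listed nodes are `Ready`.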

install-kube-v2.yml

---
- hosts: "masters, workers"
  remote_user: root
  become: yes
  become_method: sudo
  become_user: root
  gather_facts: yes
  connection: ssh

  tasks:
    - name: Stop and disable firewalld.
      service:
        name: firewalld
        state: stopped
        enabled: False

    - name: set SELinux to permissive mode
      shell: |
        sudo setenforce 0
        sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

    - name: disable swap, as required by kubelet
      shell: |
        sudo swapoff -a
        sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
        sudo mount -a

    - name: create the kernel module config file for containerd, our container runtime
      shell: |
        cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
        overlay
        br_netfilter
        EOF

    - name: load the kernel modules required by containerd
      shell: |
        sudo modprobe overlay
        sudo modprobe br_netfilter

    - name: sysctl params required by setup, params persist across reboots
      shell: |
        cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
        net.bridge.bridge-nf-call-iptables = 1
        net.bridge.bridge-nf-call-ip6tables = 1
        net.ipv4.ip_forward = 1
        EOF

    - name: apply sysctl settings without a reboot
      command: sudo sysctl --system

    # Force-enable IPv4 forwarding
    - name: set /proc/sys/net/ipv4/ip_forward
      shell: |
        sudo sysctl -w net.ipv4.ip_forward=1

    - name: install containerd, write its default config, and restart it
      shell: |
        sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
        sudo yum install -y containerd.io
        sudo mkdir -p /etc/containerd
        sudo containerd config default | sudo tee /etc/containerd/config.toml
        sudo systemctl restart containerd

    - name: Create a kube repo file
      file:
        path: "/etc/yum.repos.d/kubernetes.repo"
        state: "touch"

    - name: write repo information in kube repo file
      blockinfile:
        path: "/etc/yum.repos.d/kubernetes.repo"
        block: |
          [kubernetes]
          name=Kubernetes
          baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
          enabled=1
          gpgcheck=1
          repo_gpgcheck=1
          gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

    - name: install kubernetes
      shell: |
        sudo yum install -y kubelet kubeadm kubectl
        sudo systemctl enable --now kubelet
        sudo systemctl start kubelet
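
After this playbook runs, the sysctl file it writes can be checked on each node. A minimal sketch, assuming a hypothetical helper named `check_k8s_sysctl`; the default path is the one the playbook writes, and the optional argument exists only so that another file can be checked:

```shell
# Hypothetical helper: succeed only if all three keys written by the playbook
# are set to 1 in the given file (default: /etc/sysctl.d/k8s.conf).
check_k8s_sysctl() {
  local file="${1:-/etc/sysctl.d/k8s.conf}" key
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # Match "key = 1", allowing optional whitespace around "="
    if ! grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$file"; then
      echo "missing or not 1: ${key}" >&2
      return 1
    fi
  done
}

# Example (on any node):
#   check_k8s_sysctl && echo "sysctl config OK"
```

This checks only the persisted configuration file, not the values currently active in the kernel.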

master-setup.yml

- hosts: masters
  become: yes

  tasks:
    - name: start the cluster
      shell: kubeadm init --pod-network-cidr=192.168.0.0/16
      args:
        chdir: $HOME
      async: 60
      poll: 60

    - name: create a new directory to hold kube conf
      # ansible.builtin.file:
      #   path: /etc/kubernetes
      #   state: directory
      #   mode: '0755'
      become: yes
      become_user: root
      file:
        path: $HOME/.kube
        state: directory
        mode: '0755'

    - name: copy configuration file to the newly created dir
      # become: true
      # become_user: root
      # ansible.builtin.copy:
      #   src: /etc/kubernetes/admin.conf
      #   dest: $HOME/.kube/config
      #   remote_src: true
      copy:
        src: /etc/kubernetes/admin.conf
        dest: $HOME/.kube/config
        remote_src: yes
        owner: root

    - name: set kubeconfig file permissions
      file:
        path: $HOME/.kube/config
        owner: "{{ ansible_effective_user_id }}"
        group: "{{ ansible_effective_group_id }}"

    - name: Apply a calico manifest to init the pod network
      # args:
      #   chdir: $HOME
      # ansible.builtin.command: kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
      become: yes
      become_user: root
      shell: |
        curl https://raw.githubusercontent.com/projectcalico/calico/v3.24.5/manifests/calico.yaml -O
        kubectl apply -f calico.yaml
      args:
        chdir: $HOME

    - name: Get the join command to be used by the worker
      become: yes
      become_user: root
      shell: kubeadm token create --print-join-command
      register: kube_join_command

    - name: Save the join command to a local file
      become: yes
      local_action: copy content="{{ kube_join_command.stdout_lines[0] }}" dest="/etc/kube_join_command" mode=0777
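
Before running the worker playbook, the saved file can be sanity-checked on the machine where Ansible runs. A rough sketch, assuming a hypothetical helper named `looks_like_join_command`; it only checks that the file looks like a `kubeadm join` line and does not validate the token itself:

```shell
# Hypothetical helper: succeed if the file (default: /etc/kube_join_command)
# contains a line starting with "kubeadm join" plus the token and CA hash flags.
looks_like_join_command() {
  local file="${1:-/etc/kube_join_command}"
  grep -q '^kubeadm join ' "$file" &&
    grep -q -- '--token ' "$file" &&
    grep -q -- '--discovery-token-ca-cert-hash sha256:' "$file"
}

# Example:
#   looks_like_join_command || echo "join command file is missing or malformed"
```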

worker-setup.yml

- hosts: workers
  become: yes
  gather_facts: yes

  tasks:
    - name: Copy the join command file saved in the previous step to the worker node.
      become: yes
      copy:
        src: /etc/kube_join_command
        dest: /etc/kube_join_command
        mode: '0777'

    - name: Join the worker node to the cluster.
      become: yes
      command: sh /etc/kube_join_command

Troubleshooting

# If an error occurs, run kubeadm reset and then rerun the playbook.

kubeadm reset

# For an IPv4 error, run the commands below on the master and worker nodes, restart kubelet, and then rerun the playbook

modprobe br_netfilter

echo 1 > /proc/sys/net/ipv4/ip_forward
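
To see whether the two commands above took effect, the live kernel values can be read back. A minimal sketch, assuming a hypothetical helper named `check_net_preflight`; the optional argument overrides the `/proc/sys` root and is there only so the function can be tried against a test directory:

```shell
# Hypothetical helper: succeed only if IPv4 forwarding and bridge netfilter
# are both enabled. The bridge entry exists only once br_netfilter is loaded.
check_net_preflight() {
  local root="${1:-/proc/sys}" f rc=0
  for f in net/ipv4/ip_forward net/bridge/bridge-nf-call-iptables; do
    if [ "$(cat "$root/$f" 2>/dev/null)" != "1" ]; then
      echo "not enabled: $root/$f" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Example (on each node):
#   check_net_preflight && echo "kernel network settings OK"
```

A value written with `echo 1 > /proc/sys/net/ipv4/ip_forward` does not survive a reboot; the persistent setting is the one in `/etc/sysctl.d/k8s.conf`.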
