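How to run minikube with minikube start --driver=none

With driver=none installed on a machine such as a VM, the cluster can be reached directly at the host's IP without going through a proxy. This makes it a useful way to understand how Kubernetes is put together.

install minikube using docker and cri-dockerd on CentOS Stream 9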

- Verified on 2024-06-07

- Installing with CRI-O as the runtime works, but minikube addons enable then fails

- With podman installed, minikube start --driver=podman --force works

- Run commands in the form minikube kubectl -- get po, or

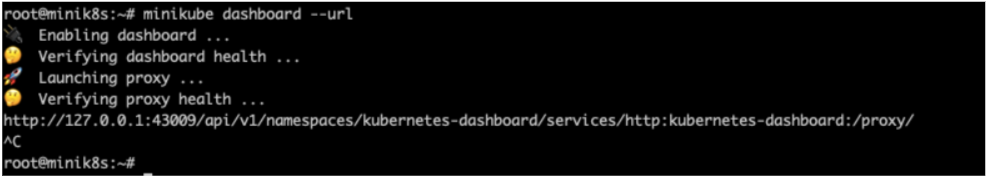

set alias kubectl="minikube kubectl --". With this approach (the podman driver), the cluster can only be reached through a proxy.


- With CRI-O installed, minikube start --driver=none --container-runtime=cri-o starts.
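
The alias above can also be written as a shell function. A function also works inside scripts, where bash does not expand aliases by default. This is a minimal sketch that assumes bash. The function shadows the kubectl name, so remove it once the standalone kubectl binary from the steps below is installed.

```shell
# Forward "kubectl ..." to the kubectl bundled with minikube.
# Sketch: add to ~/.bashrc. It shadows any kubectl binary on PATH.
kubectl() {
  # "--" keeps minikube from parsing kubectl's own flags such as -n or -A
  minikube kubectl -- "$@"
}
```

With this defined, kubectl get po -A runs minikube kubectl -- get po -A.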

Installation order

- dnf update

- install docker

- install crio

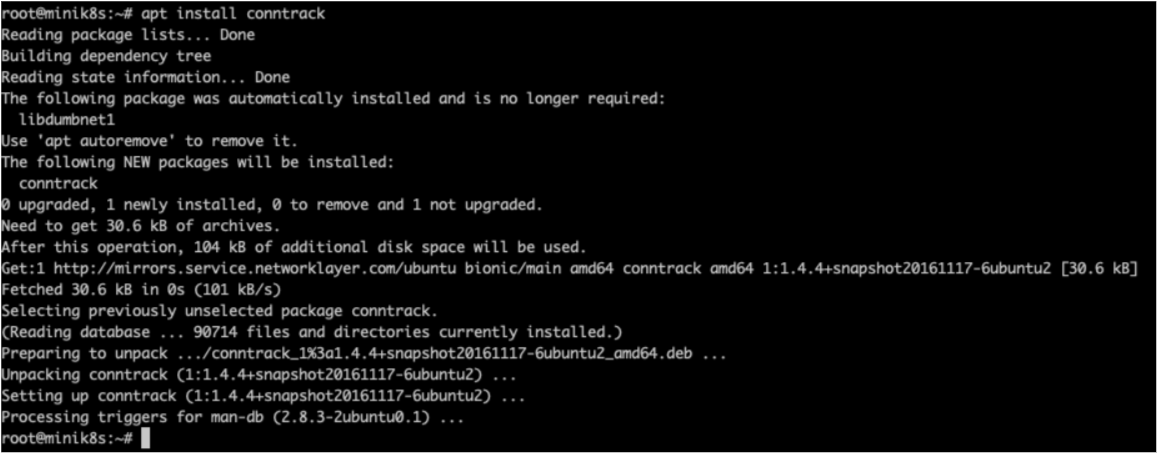

- install conntrack

- install cri-dockerd

- check the contents of /etc/crictl.yaml

- check where the container runtime endpoint points: runtime-endpoint: unix:///var/run/cri-dockerd.sock

- copy /etc/crio/crio.conf.d/10-crio.conf to /etc/crio/crio.conf.d/02-crio.conf

- check the contents of /etc/crictl.yaml again

- install kubectl

- install minikube

- minikube start --driver=none --container-runtime=cri-o
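
Before running minikube start, it can help to confirm that the binaries from the order above are actually on PATH. The troubleshooting notes below show minikube failing when crictl or cri-dockerd is missing. check_prereqs is a hypothetical helper, and its default list is an assumption based on this install order. Pass your own list to override it.

```shell
# Hypothetical helper: report which required binaries are not on PATH.
# Default list is an assumption taken from the install order in this post.
check_prereqs() {
  local missing=()
  local cmd
  if [ "$#" -eq 0 ]; then
    set -- docker crio conntrack crictl cri-dockerd kubectl minikube
  fi
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      missing+=("$cmd")
    fi
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    echo "missing: ${missing[*]}"
    return 1
  fi
  echo "all present"
}
```

Running check_prereqs with no arguments checks the default list. Running check_prereqs crictl cri-dockerd checks only those two.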

update dnf

dnf update

install docker

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

sudo systemctl start docker

install crio

curl https://raw.githubusercontent.com/cri-o/packaging/main/get | bash

systemctl start crio

systemctl status crio

crio status info

crictl info

install conntrack

yum install -y conntrack

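install cri-dockerd

Both downloads below are needed.

The source archive does not contain the cri-dockerd binary. The binary comes from the release tarball, and the systemd unit files (packaging/systemd) come from the source archive.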

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.14/cri-dockerd-0.3.14.amd64.tgz

wget https://github.com/Mirantis/cri-dockerd/archive/refs/tags/v0.3.14.tar.gz

tar -xvf cri-dockerd-0.3.14.amd64.tgz

install -o root -g root -m 0755 ./cri-dockerd/cri-dockerd /usr/local/bin/cri-dockerd

install -o root -g root -m 0755 ./cri-dockerd/cri-dockerd /usr/bin/cri-dockerd

tar -xvf v0.3.14.tar.gz

install ./cri-dockerd-0.3.14/packaging/systemd/* /etc/systemd/system

systemctl daemon-reload

systemctl enable cri-docker.service

systemctl start cri-docker.service

systemctl status cri-docker
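
The cri-dockerd steps above hard-code v0.3.14 in several places. The sketch below keeps the version in one argument and assumes bash. cri_dockerd_install_cmds is a hypothetical helper: it only prints the commands so they can be reviewed before they are run as root. Only v0.3.14 was actually tested here. The extra copy to /usr/bin appears to be needed because the cri-docker.service unit in packaging/systemd starts /usr/bin/cri-dockerd.

```shell
# Hypothetical helper: print this post's cri-dockerd install commands for a
# given release, for review before piping them to a root shell.
# Only v0.3.14 was tested in this post.
cri_dockerd_install_cmds() {
  local version="${1:-0.3.14}"
  local base="https://github.com/Mirantis/cri-dockerd"
  # Unquoted EOF: ${...} is expanded, but the * glob is printed literally
  cat <<EOF
set -e
wget ${base}/releases/download/v${version}/cri-dockerd-${version}.amd64.tgz
wget ${base}/archive/refs/tags/v${version}.tar.gz
tar -xvf cri-dockerd-${version}.amd64.tgz
install -o root -g root -m 0755 ./cri-dockerd/cri-dockerd /usr/local/bin/cri-dockerd
install -o root -g root -m 0755 ./cri-dockerd/cri-dockerd /usr/bin/cri-dockerd
tar -xvf v${version}.tar.gz
install ./cri-dockerd-${version}/packaging/systemd/* /etc/systemd/system
systemctl daemon-reload
systemctl enable cri-docker.service
systemctl start cri-docker.service
EOF
}

# Usage: review first, then run as root
#   cri_dockerd_install_cmds 0.3.14
#   cri_dockerd_install_cmds 0.3.14 | sudo bash
```

The leading set -e stops the piped script at the first failed download or install, so it does not continue with missing files.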

# Copy /etc/crio/crio.conf.d/10-crio.conf to 02-crio.conf

cp /etc/crio/crio.conf.d/10-crio.conf /etc/crio/crio.conf.d/02-crio.conf

Install kubectl

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
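
Before the install step, the downloaded binary can be checked against the SHA-256 digest that dl.k8s.io publishes alongside it as kubectl.sha256. This follows the official kubectl install instructions. verify_sha256 is a hypothetical helper name. The sketch assumes GNU coreutils sha256sum.

```shell
# Hypothetical helper: check a file against a .sha256 file that holds only the
# hex digest, as the kubectl .sha256 files on dl.k8s.io do.
verify_sha256() {
  local file="$1" sumfile="$2"
  # sha256sum --check expects "<digest>  <filename>" (two spaces)
  echo "$(cat "$sumfile")  $file" | sha256sum --check --status
}

# Usage (fetch stable.txt once so both URLs point at the same release):
#   KVER="$(curl -L -s https://dl.k8s.io/release/stable.txt)"
#   curl -LO "https://dl.k8s.io/release/${KVER}/bin/linux/amd64/kubectl"
#   curl -LO "https://dl.k8s.io/release/${KVER}/bin/linux/amd64/kubectl.sha256"
#   verify_sha256 kubectl kubectl.sha256 && echo "kubectl: OK"
```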

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

kubectl version --client

install minikube

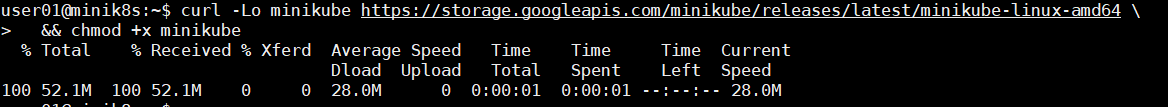

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

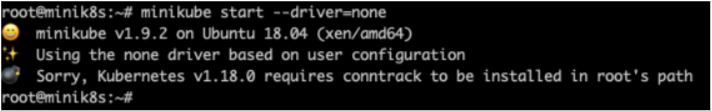

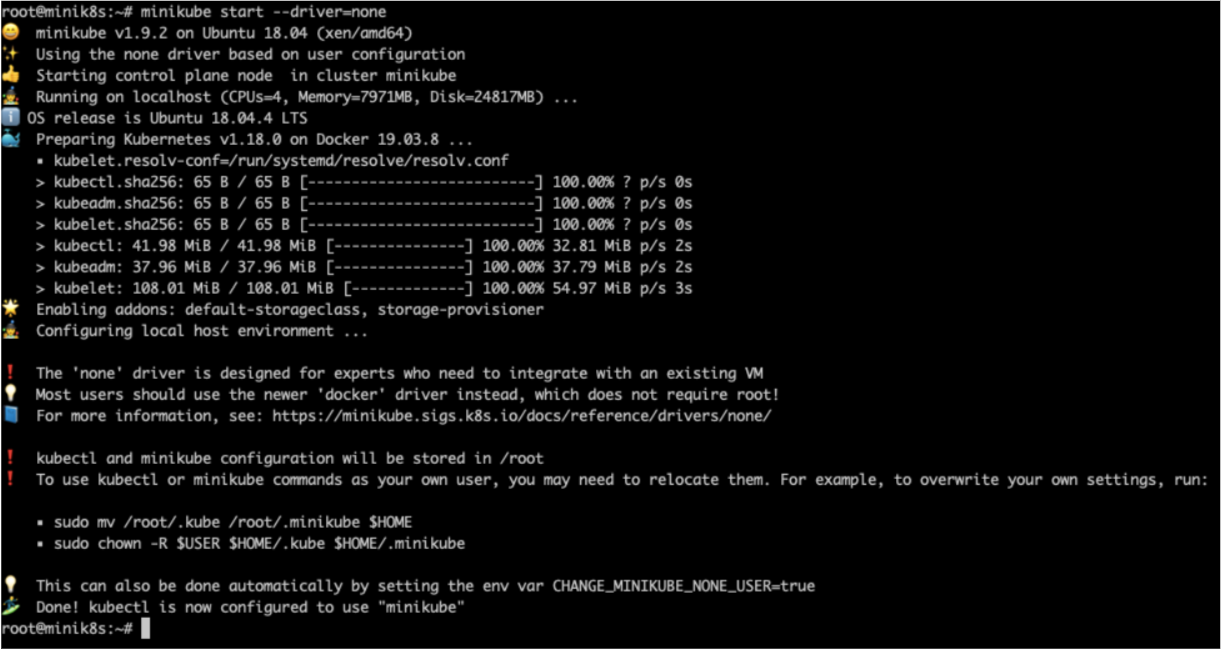

minikube start --driver=none

# minikube start --driver=none --container-runtime=cri-o --force

Confirmed working.

- installed with cri-dockerd (runtime: docker)

$ minikube profile list
|----------|-----------|---------|---------------|------|---------|---------|-------|----------------|--------------------|
| Profile  | VM Driver | Runtime | IP            | Port | Version | Status  | Nodes | Active Profile | Active Kubecontext |
|----------|-----------|---------|---------------|------|---------|---------|-------|----------------|--------------------|
| minikube | none      | docker  | 10.30.147.175 | 8443 | v1.30.0 | Running | 1     | *              | *                  |
|----------|-----------|---------|---------------|------|---------|---------|-------|----------------|--------------------|

troubleshooting

Setting up containerd as the runtime (this does not work with minikube):

sudo containerd config default | sudo tee /etc/containerd/config.toml

sudo systemctl restart containerd

When using docker as the runtime:

cri-dockerd must be installed.

The CNI plugins are not needed.

When using containerd or CRI-O as the runtime:

Installation succeeds, but minikube addons enable fails with the error below.


❌ Exiting due to MK_ADDON_ENABLE_PAUSED: enabled failed: check paused: list paused: crictl list: sudo -s eval "crictl ps -a --quiet --label io.kubernetes.pod.namespace=kube-system": exit status 127

stdout:

stderr:

/bin/bash: line 1: crictl: command not found

crictl installation errors

After installing crictl, /etc/crictl.yaml has to be created and its contents added.
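
/etc/crictl.yaml only needs to point crictl at the runtime's CRI socket. This is a minimal sketch that assumes it is run as root. write_crictl_config is a hypothetical helper. The default socket is the cri-dockerd one used in this post. The CRI-O socket path in the usage lines, /var/run/crio/crio.sock, is the usual CRI-O default and is assumed here.

```shell
# Hypothetical helper: write a crictl config that points at one CRI socket.
# Defaults: the cri-dockerd socket from this post, written to /etc/crictl.yaml.
write_crictl_config() {
  local endpoint="${1:-unix:///var/run/cri-dockerd.sock}"
  local target="${2:-/etc/crictl.yaml}"
  # runtime-endpoint / image-endpoint are the crictl config keys for the socket
  cat > "$target" <<EOF
runtime-endpoint: ${endpoint}
image-endpoint: ${endpoint}
timeout: 10
debug: false
EOF
}

# Usage (as root):
#   write_crictl_config                                  # docker via cri-dockerd
#   write_crictl_config unix:///var/run/crio/crio.sock   # CRI-O (assumed path)
#   crictl info
```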

If a cri-dockerd.sock error appears after installing cri-dockerd,

add /usr/local/bin to the default secure_path (edit it with visudo).
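
As the crictl error above shows (sudo -s eval "crictl ps ..."), minikube calls the runtime tools through sudo. sudo replaces PATH with secure_path, which on CentOS typically is /sbin:/bin:/usr/sbin:/usr/bin. That is likely why binaries installed under /usr/local/bin are reported as not found. The sketch below only computes the new value; the actual edit should still go through visudo. append_path_entry is a hypothetical helper.

```shell
# Hypothetical helper: append a directory to a colon-separated path list
# only if it is not already present (running it twice changes nothing).
append_path_entry() {
  local path="$1" entry="$2"
  case ":${path}:" in
    *":${entry}:"*) echo "$path" ;;          # already present, unchanged
    *) echo "${path:+${path}:}${entry}" ;;   # append; no leading ':' when empty
  esac
}

# Example: print the line to paste in place of the existing one via "sudo visudo"
#   echo "Defaults    secure_path = $(append_path_entry /sbin:/bin:/usr/sbin:/usr/bin /usr/local/bin)"
```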

Errors hit during installation

Following the steps below, minikube addons enable dashboard fails.

- install docker, conntrack, minikube

- minikube start --driver=none

- It fails, saying crictl is required. GUEST_MISSING_CONNTRACK also appears even though conntrack was installed?

- After installing the CNI plugins, crictl info reports an error that /etc/cni/net.d/ contains no files

- minikube start --driver=none fails, saying cri-dockerd is required

- Even after making containerd the default, setting runtime-endpoint: unix:///var/run/containerd/containerd.sock in crictl.yaml, and running minikube start --driver=none --container-runtime=containerd, it fails saying crictl is missing

- After installing CRI-O, minikube start still fails with the same crictl error

- Changed crictl.yaml to point at crio.sock

- minikube start --driver=none --container-runtime=cri-o then runs, but addons enable fails

install cni-plugin

Not required.

CNI_PLUGIN_VERSION="v1.5.0"

# change arch if not on amd64

CNI_PLUGIN_TAR="cni-plugins-linux-amd64-$CNI_PLUGIN_VERSION.tgz"

CNI_PLUGIN_INSTALL_DIR="/opt/cni/bin"

curl -LO "https://github.com/containernetworking/plugins/releases/download/$CNI_PLUGIN_VERSION/$CNI_PLUGIN_TAR"

sudo mkdir -p "$CNI_PLUGIN_INSTALL_DIR"

sudo tar -xf "$CNI_PLUGIN_TAR" -C "$CNI_PLUGIN_INSTALL_DIR"

rm "$CNI_PLUGIN_TAR"
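
The "change arch if not on amd64" comment above can be automated. This sketch maps uname -m to the architecture names used in the CNI plugins release files (cni-plugins-linux-<arch>-<version>.tgz). cni_arch is a hypothetical helper. It handles only amd64 and arm64; anything else is rejected rather than guessed.

```shell
# Hypothetical helper: map `uname -m` output to the release-file arch name.
# Only amd64 and arm64 are handled in this sketch.
cni_arch() {
  local machine="${1:-$(uname -m)}"
  case "$machine" in
    x86_64)        echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *)
      echo "unsupported architecture: $machine" >&2
      return 1
      ;;
  esac
}

# Usage with the variables above:
#   CNI_PLUGIN_TAR="cni-plugins-linux-$(cni_arch)-$CNI_PLUGIN_VERSION.tgz"
```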

# After installation, crictl info shows the errors below

"lastCNILoadStatus": "cni config load failed: no network config found in /etc/cni/net.d: cni plugin not initialized: failed to load cni config",

"lastCNILoadStatus.default": "cni config load failed: no network config found in /etc/cni/net.d: cni plugin not initialized: failed to load cni config"
